Speech Enhancement with Neural Homomorphic Synthesis: Online Samples

Authors:

Wenbin Jiang, Zhijun Liu, Kai Yu, Fei Wen

Abstract:

Most deep learning-based speech enhancement methods operate directly on time-frequency representations or learned features without making use of the model of speech production. This work proposes a new speech enhancement method based on neural homomorphic synthesis. The speech signal is first decomposed into excitation and vocal tract components with complex cepstrum analysis. Then, two complex-valued neural networks are applied to estimate the target complex spectra of the decomposed components. Finally, the time-domain speech signal is synthesized from the estimated excitation and vocal tract. Furthermore, we investigated numerous loss functions and found that the multi-resolution STFT loss, commonly used in TTS vocoders, benefits speech enhancement. Experimental results demonstrate that the proposed method outperforms existing state-of-the-art complex-valued neural network-based methods in terms of both PESQ and eSTOI.
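
The decomposition step in the abstract can be illustrated with a small cepstral liftering sketch. This is not the authors' implementation: for simplicity it uses the real cepstrum (magnitude only) instead of the complex cepstrum named in the abstract, and the function name `cepstral_split`, the `cutoff` of 30 quefrency bins, and the random test frame are all assumptions chosen for illustration. Low-quefrency coefficients are treated as the vocal tract envelope, the rest as the excitation.

```python
import numpy as np

def cepstral_split(frame, cutoff=30, eps=1e-8):
    """Split one frame's magnitude spectrum into a smooth envelope
    (vocal tract) and a fine-structure part (excitation).

    Hypothetical helper: uses the real cepstrum, not the complex
    cepstrum described in the abstract.
    """
    n = len(frame)
    spectrum = np.fft.rfft(frame)
    log_mag = np.log(np.abs(spectrum) + eps)

    # Real cepstrum: inverse FFT of the log-magnitude spectrum.
    cepstrum = np.fft.irfft(log_mag, n=n)

    # Symmetric low-quefrency lifter (keeps both ends of the cepstrum).
    lifter = np.zeros(n)
    lifter[:cutoff] = 1.0
    if cutoff > 1:
        lifter[-(cutoff - 1):] = 1.0

    tract_cep = cepstrum * lifter
    excitation_cep = cepstrum * (1.0 - lifter)

    # Back to the spectral domain; the two parts multiply to |X| + eps.
    tract_mag = np.exp(np.fft.rfft(tract_cep).real)
    excitation_mag = np.exp(np.fft.rfft(excitation_cep).real)
    return tract_mag, excitation_mag, np.abs(spectrum)

# Toy input: a windowed noise frame standing in for a speech frame.
rng = np.random.default_rng(0)
frame = rng.standard_normal(512) * np.hanning(512)
tract_mag, excitation_mag, mag = cepstral_split(frame)
```

Because the two liftered cepstra sum to the full cepstrum, the product `tract_mag * excitation_mag` recovers the original magnitude spectrum, which is the homomorphic property the synthesis step relies on.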

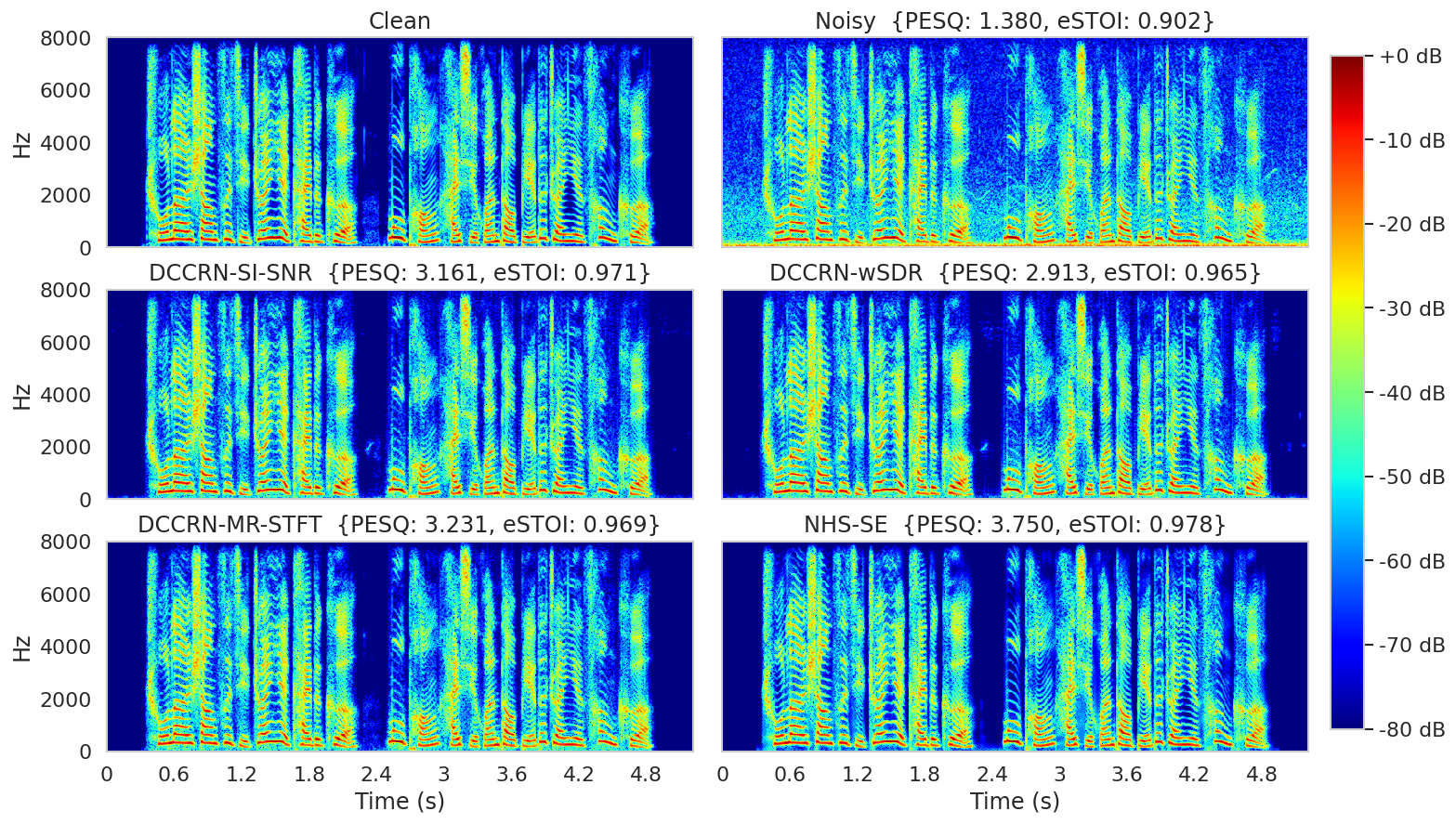

Seen Noise

| Model\Noise | OMEETING | DKITCHEN | OMEETING | PCAFETER | TBUS |
|---|---|---|---|---|---|
| Clean | | | | | |
| Noisy | | | | | |
| DCCRN-SI-SNR | | | | | |
| DCCRN-wSDR | | | | | |
| DCCRN-MR-STFT | | | | | |
| NHS-SE | | | | | |

Spectrograms of the samples in the first column

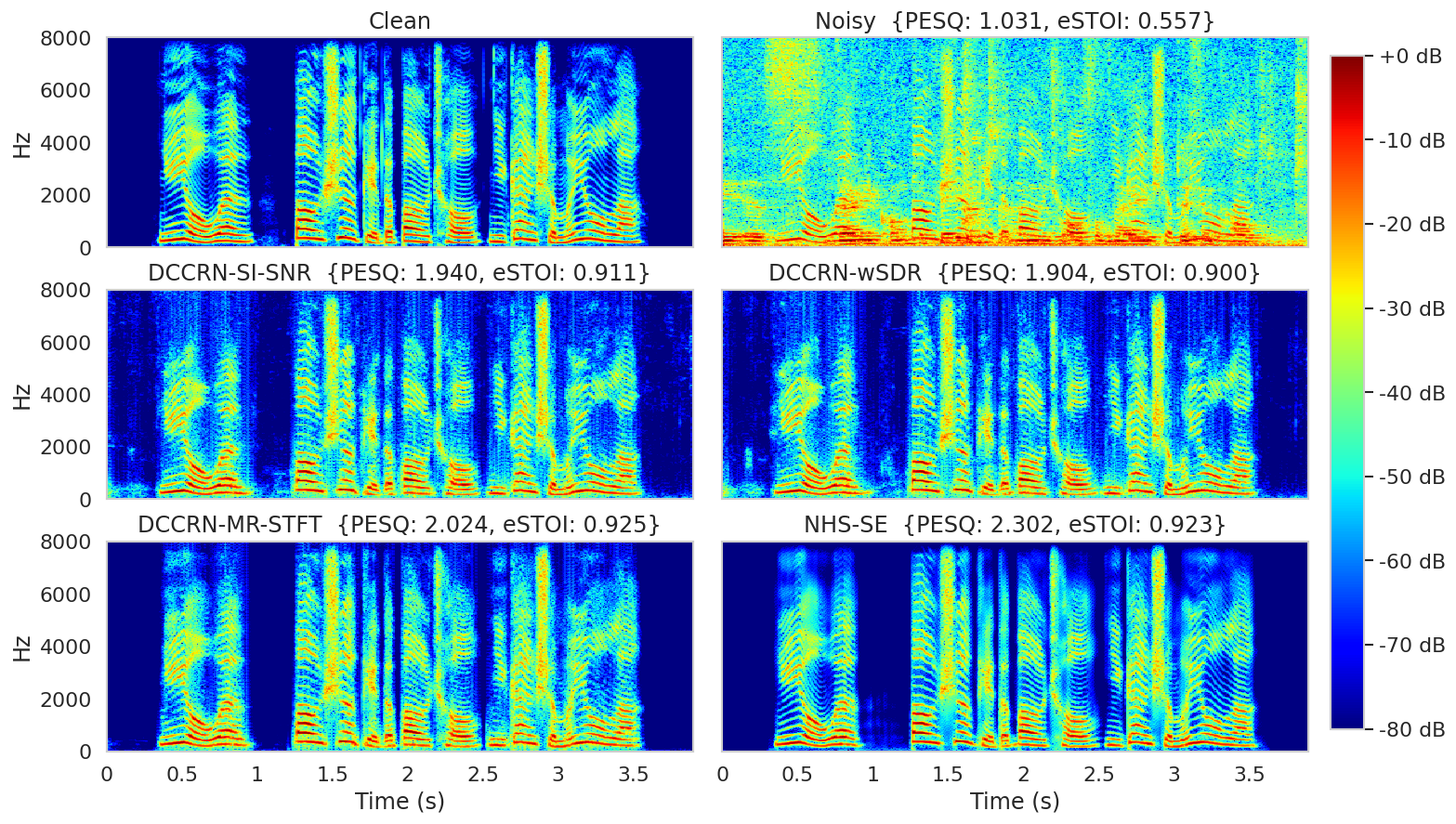

Unseen Noise

| Model\Noise | NFIELD | NPARK | NRIVER | SCAFE | SPSQUARE |
|---|---|---|---|---|---|
| Clean | | | | | |
| Noisy | | | | | |
| DCCRN-SI-SNR | | | | | |
| DCCRN-wSDR | | | | | |
| DCCRN-MR-STFT | | | | | |
| NHS-SE | | | | | |

Spectrograms of the samples in the first column